Please wait as we load hundreds of rigorously documented facts for you.

For example:

* Pollution is defined by the American Heritage Science Dictionary as the:

* A small amount of a given pollutant confined to a small area may cause harm, while a far larger amount of the same pollutant dispersed over a large area can be harmless.[2] A “fundamental principle” of toxicology is “the dose makes the poison.”[3]

* Per the academic book Chemical Exposure and Toxic Responses:

* Per the textbook Understanding Environmental Pollution:

* In addition to a substance’s chemical structure and dosage, other factors that affect its toxicity include (but are not limited to) the duration of exposure, the route of exposure (e.g., skin contact, inhalation, ingestion), and the physiology of the exposed organisms.[7] [8] [9]

* Per a teaching guide published by the American Society for Microbiology:

* Applied to humans, the results of the above study indicate that:

* A survey of about 5,600 people from eight countries published in 2019 by the journal Nature Chemistry found that 76% of European consumers believe that exposure to a “toxic synthetic chemical substance is always dangerous,” no matter what the level of exposure.[19]

* A scientific, nationally representative survey commissioned in 2020 by Just Facts found that 65% of U.S. voters believe that “contact with a toxic chemical is always dangerous, no matter what the level of exposure.”[20] [21] [22]

* The United States Environmental Protection Agency (EPA) monitors the outdoor (ambient) concentrations of six major air pollutants on a nationwide basis. These are called “criteria pollutants.”[28] [29] [30]

* Under federal law, criteria pollutants are those that are deemed by the administrator of the EPA to be widespread and to “cause or contribute to air pollution which may reasonably be anticipated to endanger public health or welfare….”[31] [32] [33]

* The six criteria pollutants are carbon monoxide, ground-level ozone, lead, nitrogen dioxide, particulate matter, and sulfur dioxide.[34]

* The EPA administrator is required by law to establish “primary” air quality standards for criteria pollutants that are “requisite to protect the public health” with “an adequate margin of safety….”[35] [36]

* The EPA administrator is also required to establish “secondary” air quality standards “requisite to protect the public welfare,” a term that includes “animals, crops, vegetation, and buildings.”[37] [38]

* For some criteria pollutants, EPA has established a single criterion as the primary and secondary air quality standard. In other cases, EPA has established up to two different criteria as primary air quality standards and up to two different criteria as secondary air quality standards.[39]

* Per an EPA summary of laws and court decisions relevant to the process of setting air quality standards:

* The administrator of the EPA is appointed by the president, contingent upon the approval of a majority vote in the Senate.[42] [43]

* According to primary EPA measures of criteria pollutants, the average U.S. ambient levels of:

* A scientific, nationally representative survey commissioned in 2019 by Just Facts found that 40% of voters believe the air in the United States is now more polluted than it was in the 1980s.[51] [52] [53]

* Per the American Heritage Dictionary of Science, carbon monoxide (CO) is:

* According to the EPA, the main sources of CO emissions in the U.S. are:

* Regarding the accuracy of EPA’s CO emissions data over time:

* Ambient CO concentrations typically peak near roadways and during the times of the day when commuting is heaviest.[64]

* The population most susceptible to elevated CO levels is people with coronary artery disease.[65] Coronary artery disease is typically caused by the build-up of cholesterol-containing deposits in major arteries.[66]

* The primary study used by the EPA to set clean air standards for CO was conducted on subjects with moderate to severe coronary artery disease, more than half of whom previously had heart attacks. To establish a baseline, participants engaged in mild exercise on a treadmill while measurements were made of the time it took to develop chest pain and a specific electrocardiogram signal that indicates insufficient oxygen supply to the heart. Subjects repeated this test after resting for about an hour while being exposed to elevated CO levels ranging from 42 to 202 parts per million (mean of 117). After exposure, the amount of time spent exercising before the onset of chest pain decreased by 4.2%, and the amount of time spent exercising before this specific electrocardiogram signal emerged decreased by 5.1%.[67] [68]

* An EPA primary clean air standard for carbon monoxide is an 8-hour average of 9 parts per million (ppm), not to be exceeded more than once per year.[69] [70] From 1980 to 2022, the average U.S. ambient carbon monoxide level decreased by 88% as measured by this standard:

* All of the U.S. population lives in counties that meet EPA’s clean air standard for carbon monoxide.[73] [74] [75] Per the EPA, a “large proportion” of monitoring sites have CO levels that are below the limit that conventional instruments can detect (1 ppm).[76]

* Per the EPA, ground-level ozone (O3):

* According to the EPA, the main sources of NOx emissions in the U.S. are:

* According to the EPA, the main sources of VOC emissions in the U.S. are:

* Regarding the accuracy of EPA’s VOC emissions data over time:

* The populations most susceptible to elevated ozone levels are children, the elderly, people with lung disease, and people who are active outdoors.[89] [90]

* Ambient ozone concentrations typically peak on hot sunny days in urban areas.[91] Per the EPA:

* EPA’s primary and secondary clean air standard for ozone is 0.070 parts per million (ppm) as measured by a 3-year average of the fourth-highest daily maximum 8-hour concentration per year.[93] [94] From 1980 to 2022, the average U.S. ambient ozone level decreased by 29% as measured by this standard:

* According to EPA data, as of 2024, 37% of the U.S. population lives in counties that do not meet EPA’s clean air standard for ozone.[97]
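
The ozone metric described above (a 3-year average of each year's fourth-highest daily maximum 8-hour concentration) can be sketched in a few lines of Python. This is a minimal illustration, not EPA's procedure: the function names and the daily values are hypothetical, and the official calculation applies additional data-handling rules that are not modeled here.

```python
from statistics import mean

OZONE_STANDARD_PPM = 0.070  # standard cited in the text


def fourth_highest(daily_max_8hr_ppm):
    """Return the fourth-highest daily maximum 8-hour value for one year."""
    if len(daily_max_8hr_ppm) < 4:
        raise ValueError("need at least four daily values")
    return sorted(daily_max_8hr_ppm, reverse=True)[3]


def ozone_metric(three_years):
    """Average the fourth-highest value from each of three consecutive years."""
    if len(three_years) != 3:
        raise ValueError("expected exactly three years of data")
    return mean(fourth_highest(year) for year in three_years)


# Hypothetical daily maxima in ppm; a real record has a value for most days.
year_1 = [0.081, 0.077, 0.074, 0.072, 0.069, 0.060, 0.055]
year_2 = [0.079, 0.075, 0.071, 0.068, 0.066, 0.058, 0.052]
year_3 = [0.076, 0.073, 0.070, 0.067, 0.064, 0.057, 0.050]

metric_ppm = ozone_metric([year_1, year_2, year_3])
meets_standard = metric_ppm <= OZONE_STANDARD_PPM
```

Because only the fourth-highest day of each year enters the average, the three highest days of any year do not affect the result in this sketch.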

* Before the EPA made the clean air standard for ozone stricter in 2015,[98] the EPA estimated in 2014 that:

* Lead (Pb) is a metallic element that can be released as particles into the air. These airborne particles can be directly inhaled, or they can settle out of the air into water and food supplies, and thus be ingested orally.[101] Lead can accumulate in the human body over extended periods, resulting in a condition known as “cumulative poisoning.” This can impair cognitive ability and cause conditions such as high blood pressure and kidney dysfunction.[102] [103]

* According to the EPA, the main sources of lead emissions in the U.S. are:

* Ambient lead concentrations typically peak near mines, busy roadways, and factories that melt or fuse lead.[107]

* The population most susceptible to elevated lead concentrations is children. Effects can include behavioral disorders, learning deficits, and lowered IQ.[108] [109] The EPA set the clean air standard for lead with the goal of precluding a mean IQ loss of more than one or two points among children exposed to this threshold.[110]

* EPA’s primary and secondary clean air standard for lead is a rolling 3-month average of 0.15 micrograms per cubic meter (μg/m3).[111] From 1980 through 2018, the average U.S. ambient lead level decreased by 99% as measured at six sites by this standard. From 2010 to 2022, the average U.S. ambient lead level decreased by 88% as measured at more than 80 sites by this standard:

* According to EPA data:

* Nitrogen dioxide (NO2) is a highly reactive gas that can cause respiration problems.[116] [117]

* According to the EPA, the main sources of NO2 emissions in the U.S. are:

* Ambient NO2 concentrations typically peak near roadways. Per the EPA, NO2 monitors are “not sited to measure peak roadway-associated NO2 concentrations,” and thus, “individuals who spend time on and/or near major roadways could experience NO2 concentrations” that are 30% to 100% higher than monitors in that general area indicate.[121]

* The populations most susceptible to elevated NO2 levels are asthmatics and children.[122]

* An EPA primary and secondary clean air standard for nitrogen dioxide is an annual average of 53 parts per billion (ppb).[123] From 1980 through 2010, the average U.S. ambient nitrogen dioxide level decreased by 52% as measured by this standard:

* In 2010, the EPA created a new primary NO2 standard that supplements the preexisting standard. It is intended to provide increased protection against health effects associated with short-term exposures, as opposed to the preexisting standard, which is based on the average annual exposure. This newer standard is 100 parts per billion based on the 98th percentile of 1-hour daily maximum concentrations, averaged over 3 years.[126] [127] From 1980 through 2022, the average U.S. ambient nitrogen dioxide decreased by 65% as measured by this standard:

* According to the EPA, as of 2024, all of the U.S. population live in counties that meet EPA’s clean air standard for nitrogen dioxide.[130] [131]
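
The short-term NO2 metric above (the 98th percentile of 1-hour daily maximum concentrations, averaged over 3 years) can be illustrated as follows. This is a sketch under stated assumptions: the nearest-rank percentile rule is a simplification chosen for illustration and is not necessarily the rule regulators apply, and the function names and data are hypothetical.

```python
from statistics import mean

NO2_ONE_HOUR_STANDARD_PPB = 100  # 2010 standard cited in the text


def nearest_rank_percentile(values, percentile):
    """Nearest-rank percentile for an integer percentile from 1 to 100."""
    if not values:
        raise ValueError("need at least one value")
    ordered = sorted(values)
    # Ceiling of percentile * n / 100, done in integers to avoid float error.
    rank = (percentile * len(ordered) + 99) // 100
    return ordered[rank - 1]


def no2_one_hour_metric(daily_max_by_year):
    """Average each year's 98th-percentile daily maximum over three years."""
    if len(daily_max_by_year) != 3:
        raise ValueError("expected exactly three years of data")
    return mean(nearest_rank_percentile(year, 98) for year in daily_max_by_year)


# Hypothetical 1-hour daily maxima in ppb (simple ramps, not real data).
year_1 = list(range(1, 101))  # 100 days of data
year_2 = list(range(1, 91))   # 90 days of data
year_3 = list(range(1, 81))   # 80 days of data

metric_ppb = no2_one_hour_metric([year_1, year_2, year_3])
meets_standard = metric_ppb <= NO2_ONE_HOUR_STANDARD_PPB
```

The same structure applies to the SO2 metric, with the 99th percentile and a 75 ppb threshold substituted.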

* Per the EPA, particulate matter (PM):

* The EPA monitors the ambient concentrations of two major categories of particulate matter:

* The EPA has itemized numerous methods to control PM emissions including paving unpaved roads, swapping out wood-burning stoves for propane logs, and installing particle filters/collection devices on engines and factories.[136] [137] [138]

* The populations most susceptible to elevated PM levels are individuals with heart and lung diseases, the elderly, and children.[139]

* According to the EPA, the main sources of PM10 emissions in the U.S. are:

* EPA’s primary and secondary clean air standard for PM10 is a 24-hour mean of 150 micrograms per cubic meter (μg/m3), not to be exceeded more than once per year on average over 3 years.[145] From 1990 through 2022, the average U.S. ambient PM10 level decreased by 34% as measured by this standard:

* According to EPA data, as of 2024, 2% of the U.S. population live in counties that do not meet EPA’s clean air standard for PM10.[148]

* According to the EPA, the main sources of PM2.5 emissions in the U.S. are:

* An EPA primary clean air standard for PM2.5 is an annual mean of 12 micrograms per cubic meter (μg/m3), averaged over 3 years.[154] From 2000 through 2022, the average U.S. ambient PM2.5 level decreased by 42% as measured by this standard:

* According to EPA data, as of 2024, 7% of the U.S. population live in counties that do not meet EPA’s above-cited clean air standard for PM2.5.[157]

* In 2010, before the EPA made the above-cited clean air standard for PM2.5 stricter, the EPA estimated that 6% of the U.S. population lived in counties that did not meet this standard.[158]

* Sulfur dioxide (SO2) is a highly reactive gas that can cause respiration problems.[159] [160]

* According to the EPA, the main sources of SO2 emissions in the U.S. are:

* The population most susceptible to elevated SO2 levels is asthmatics. Among healthy non-asthmatics, SO2 does not typically affect lung function until concentrations exceed 1,000 parts per billion (ppb). Among asthmatics engaged in exercise, exposure to SO2 concentrations of 200–300 ppb for 5–10 minutes has been shown to decrease lung function in 5–30% of these individuals.[166]

* Until 2010, EPA’s primary clean air standard for sulfur dioxide was an annual mean of 30 ppb.[167] From 1980 through 2010, the average U.S. ambient sulfur dioxide level decreased by 79% as measured by this standard:

* In 2010, the EPA changed the primary SO2 standard to 75 ppb, as measured by a 3-year average of the 99th percentile of 1-hour daily maximum concentrations. This standard is “substantially more stringent than the previous standards” and is intended to provide increased protection against health effects associated with short-term exposures.[171] [172] From 1980 through 2022, the average U.S. ambient sulfur dioxide level decreased by 94% as measured by this standard:

* According to EPA data, as of 2024, less than 1% of the U.S. population lives in counties that do not meet EPA’s primary clean air standard for sulfur dioxide.[175]

* After tobacco smoke, the second leading cause of lung cancer in the United States is radon, a gas that arises from the decay of natural uranium, which is common in rocks and soils.[176]

* The EPA estimates that 13% of lung cancer deaths in the U.S. are related to radon.[177] [178]

* Radon typically seeps up from the ground into houses via floors and walls. In houses with radon levels at or above 4 picocuries per liter of air (pCi/L), the EPA recommends taking mitigation actions.[179]

* As of 2017 in the United States:

* Water has a pH of 7 (neutral on the pH scale), but various natural and manmade substances in the atmosphere can combine with water to change its pH level. When rainwater has a pH lower than 5.0–5.6, it is considered acid rain.[182] [183]

* Acid rain can harm lakes, streams, aquatic life, buildings, crops, and forests.[184] [185]

* The Encyclopædia Britannica states that the:

* Like the Encyclopædia Britannica, EPA’s “Plain English Guide to the Clean Air Act” states that “sulfur dioxide (SO2) and nitrogen oxides (NOx) are the principal pollutants that cause acid precipitation” and attributes these emissions strictly to manmade sources.[187]

* According to the EPA, biogenic sources like trees and vegetation produce 12% of all NOx emissions in the U.S. and 0% of all SO2 emissions.[188] [189]

* A study published in the journal Nature in 2003 found that certain types of trees, which were thought to absorb more NOx than they emitted, actually emit more NOx than they absorb. Previous studies had underestimated these natural NOx emissions because scientists failed to replicate natural conditions by exposing the trees to ultraviolet light. Based upon the results of a study conducted under natural conditions, the study’s authors estimated that coniferous trees (such as spruce, fir, and pine) in the northern hemisphere may emit “comparable” amounts of NOx to “those produced by worldwide industrial and traffic sources.”[190] [191] [192]

* A 1989 paper in the journal Hydrobiologia faults “human activities” for the fact that “annual precipitation averages less than pH 4.5 over large areas of the Northern Temperate Zone, and not infrequently, individual rainstorms and cloud or fog-water events have pH values less than 3.”[193]

* Formic acid, an organic compound emitted by natural processes and human activities, can contribute to the acidity of rain, but it is not associated with the harmful effects of acid rain because it rapidly decomposes.[194] [195] [196]

* An academic text published in 2002 asserts that formic acid contributes “slightly” to rainwater acidity.[197]

* Based upon satellite measurements and computer models, a 2011 paper published in Nature Geoscience estimated that:

* Volatile organic compounds (VOCs) and nitrogen oxides (NOx) are the two primary precursors of ozone.[200] [201]

* According to the EPA, biogenic sources, like trees and vegetation, produce 64% of all VOC emissions and 12% of all NOx emissions in the U.S.[202] [203]

* A 2003 study published in the journal Nature estimates that coniferous forests in the northern hemisphere may emit “comparable” amounts of NOx to “those produced by worldwide industrial and traffic sources.”[204] [205] [206]

* From 2008 to 2015, EPA’s primary and secondary clean air standard for ozone was 0.075 parts per million (ppm) as measured by a 3-year average of the fourth-highest daily maximum 8-hour concentration per year.[207] [208] In 2015, the EPA lowered this to 0.070 ppm.[209] [210]

* Ozone concentrations in relatively remote U.S. wilderness areas often reach 0.050 ppm to 0.060 ppm, particularly at high-altitude locations. The EPA states that it is “impossible to determine” the causes of these elevated ozone levels using currently available data, but based upon computer models, the EPA attributes them to a combination of:

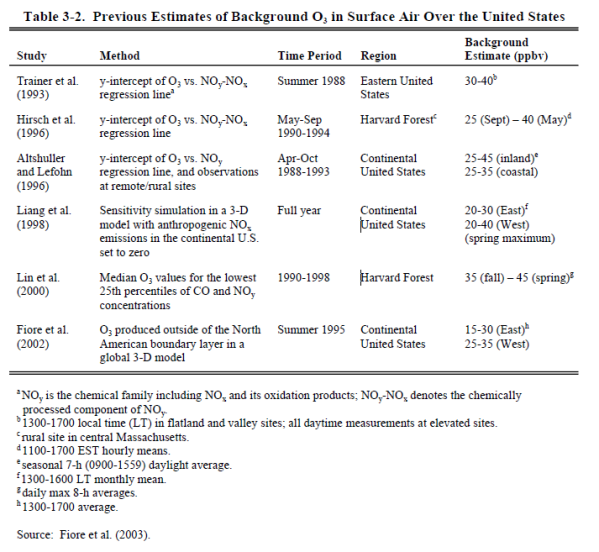

* The EPA estimates that natural ground-level ozone concentrations in the continental U.S. are roughly 0.015 ppm to 0.035 ppm and are typically less than 0.025 ppm “under conditions conducive to high O3 episodes.” Five other studies have produced results ranging from 0.020 ppm to 0.045 ppm.[212] The range of results from these six studies corresponds to natural ozone background levels that vary from 21% to 64% of EPA’s clean air standard.[213]
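
The 21% to 64% range above follows from dividing the lowest and highest background estimates (0.015 ppm and 0.045 ppm) by the 0.070 ppm standard. A minimal check, with a hypothetical helper name:

```python
OZONE_STANDARD_PPM = 0.070  # current standard cited in the text


def share_of_standard(concentration_ppm, standard_ppm=OZONE_STANDARD_PPM):
    """Express a concentration as a percentage of the standard."""
    return 100 * concentration_ppm / standard_ppm


# Lowest and highest natural-background estimates across the six studies.
low_share = share_of_standard(0.015)
high_share = share_of_standard(0.045)

print(f"{low_share:.0f}% to {high_share:.0f}% of the standard")
```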

* For a study published in the journal Nature in 2003, scientists compared the growth of trees in New York City to genetically identical trees in surrounding suburban and rural areas.[214] Contrary to expectations, the trees in the city grew about twice as fast as those in the rural areas. The study’s lead author stated:

* Experiments performed for this same study showed that higher ozone levels in the rural areas negatively impacted the trees’ growth rates. Although the city had higher peak ozone levels than the rural areas, the rural areas had higher long-term average levels than the city. The study’s authors attributed these higher rural ozone levels to manmade ozone precursors blowing in from the city and to a “scavenging reaction” that limits ozone levels in urban areas. The authors did not address the prospect that the higher ozone levels in rural areas were related to natural sources.[216] [217]

* According to the EPA, wildfires and prescribed burns (to prevent wildfires and dispose of agricultural vegetative residue) produce:[218] [219] [220]

* Between 2008 and 2014 (based on respective studies conducted in 2012 and 2018), EPA’s emission estimates from wildfires and prescribed burns changed as follows:

* Over the same period (2008–2014), the number of acres burned through wildfires and prescribed burns decreased by 9%.[226]

* On average, Americans spend 87% of their time indoors, 8% outdoors, and 6% in vehicles.[227]

* Indoor levels of ozone are typically one-tenth that of outdoor levels. This is because ozone is removed from the air by interactions with surfaces such as walls, carpeting, and furnishings.[228] [229]

* Lead exposure can often be higher in homes than outdoors, and even greater lead exposures can occur in office buildings, older homes with lead paint, and homes of smokers.[230]

* Carbon monoxide levels are typically 2–5 times higher in vehicles than outdoors. These levels generally decline as traffic volume declines and as speed increases.[231]

* Carbon monoxide levels are generally higher in homes than outdoors, and even greater levels of CO have been measured in rooms where people are smoking, indoor ice rinks (from ice resurfacing machines), homes with attached garages in which cars are idled, and indoor arenas where motocross races and tractor pulls are held.[232]

* In nations where modern energy is unavailable or prohibitively expensive, people tend to burn more wood, animal dung, crop waste, and coal in open fires and simple home stoves. This produces elevated levels of toxic indoor pollutants, because the fuels are not burned efficiently.[233] [234] [235] [236]

* In addition to criteria pollutants, the EPA is required by law to regulate the emissions of substances that:

* These substances are called “hazardous” or “toxic” air pollutants.[239]

* Unlike criteria pollutants, the law requires the EPA to consider the costs of enacting regulations to control the emissions of hazardous air pollutants.[240] [241]

* Unlike criteria pollutants, the EPA does not monitor the national ambient levels of hazardous air pollutants.[242] [243] Instead, the EPA estimates annual emissions of these pollutants.[244]

* Based on EPA emission estimates and ambient air measurements of “a subset of air toxics concentrations in a few locations,” the EPA creates computer models to approximate ambient levels of air toxics across the U.S. and some of their impacts on human health.[245] [246] [247]

* Based on EPA’s computer models, air toxics from outdoor sources increase the average risk of cancer over the first 70 years of life by 0.003 percentage points.[248] [249] For comparison, the average risk of developing cancer by the age of 70 is 20%.[250]
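
To put the two figures above on a common scale, the sketch below converts the 0.003 percentage-point increase into cases per million people and into a share of the 20% baseline. The variable names are illustrative, and the comparison assumes the two figures cover comparable populations and time spans.

```python
ADDED_RISK_PERCENTAGE_POINTS = 0.003  # modeled increase from outdoor air toxics
BASELINE_RISK_PERCENT = 20.0          # average risk of developing cancer by age 70

# A percentage point is 1 in 100, so divide by 100 before scaling to a million.
added_cases_per_million = ADDED_RISK_PERCENTAGE_POINTS / 100 * 1_000_000

# Size of the added risk relative to the baseline risk, in percent.
relative_increase_percent = (
    ADDED_RISK_PERCENTAGE_POINTS / BASELINE_RISK_PERCENT * 100
)

print(f"about {added_cases_per_million:.0f} additional cases per million people")
print(f"about {relative_increase_percent:.3f}% of the baseline risk")
```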

* EPA’s estimates of cancer risk from air toxics:

* The EPA currently regulates 188 hazardous air pollutants and has singled out:

* Between a baseline period of 1990–1993 and 2014,[264] EPA’s estimates for the combined annual emissions of all hazardous air pollutants decreased by 58%.[265] This 58% decrease includes:

* Between a baseline period of 1990–1993 and 2014,[267] EPA’s annual emission estimates for the seven hazardous air pollutants believed to account for the greatest health risks changed by the following amounts:

| Hazardous Air Pollutant | Change |
|---|---|
| acetaldehyde | 40% |
| acrolein | –7% |
| benzene | –58% |
| 1,3-butadiene | –45% |
| carbon tetrachloride | –98% |
| formaldehyde | 6% |
| tetrachloroethylene | –97% |

* Major categories of water bodies in the United States include:

* The amount of fresh water that resides under the surface of the earth is roughly 30 times greater than the world’s fresh surface waters. Such ground water feeds natural springs and streams and is used by humans for drinking, cleaning, agriculture, and industry.[277]

* Federal law requires that public water systems be tested for various contaminants and treated (if needed) to meet these standards. In 2019, 92% of public water system customers were served by facilities that had no reported violations of EPA’s health-based drinking water standards.[278] A caveat of this finding is that violations are reported by states, and the EPA has found cases in which violations were not reported.[279]

* Per a 2006 EPA report, “very little” lead in drinking water comes from water utilities. Instead, it primarily comes from indoor plumbing in public schools, apartments, and houses.[280] [281]

* Private wells are not regulated under federal law and, in most cases, they are not regulated under state law. During 1991–2004, the U.S. Geological Survey (USGS) measured contamination levels in 2,167 private wells used for household drinking water. The wells were tested for 214 manmade and natural contaminants such as pesticides, radon, fecal bacteria, and nitrate. The results were as follows:

* In agricultural areas, about 1% of private wells have pesticide levels that exceed human health benchmarks.[283]

* Some pollutants accumulate within living organisms in greater concentrations than in their surrounding environments. This is called bioaccumulation, and it occurs because certain pollutants are not easily excreted or metabolized.[284]

* Bioaccumulative substances are often passed upwards through aquatic food chains, and thus, concentrations of such chemicals tend to be higher in creatures near the top of these food chains, such as salmon and trout.[285] [286]

* A group of bioaccumulative chemicals called PCBs were banned from production in the U.S. in 1979. Due to bioaccumulation, the concentrations of PCBs in fish can range from 2,000 to more than 1,000,000 times higher than the ambient concentrations in waters that the fish inhabit.[287]

* Dioxins are a group of highly toxic bioaccumulative chemicals that are sometimes released through incineration, combustion, and other processes. Due to bioaccumulation, the concentrations of dioxins in fish can range from hundreds to thousands of times higher than the ambient concentrations in waters that the fish inhabit.[288]
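
The concentration multiples above can be read as bioaccumulation factors: the fish concentration is roughly the water concentration times the factor. The sketch below applies the PCB range of 2,000 to 1,000,000 and compares the result with the 12 parts-per-billion PCB limit that the document lists for four 8-ounce fish meals per month. The water concentration is hypothetical, and treating both concentrations in the same units is a simplifying assumption.

```python
PCB_FACTOR_RANGE = (2_000, 1_000_000)  # fish-to-water multiples from the text
PCB_FISH_LIMIT_PPB = 12                # four-meal-per-month limit from the text


def fish_concentration_range(water_ppb, factor_range):
    """Return the (low, high) fish concentration implied by a factor range."""
    low_factor, high_factor = factor_range
    return water_ppb * low_factor, water_ppb * high_factor


def max_water_concentration(fish_limit_ppb, factor):
    """Water concentration at which fish would just reach the limit."""
    return fish_limit_ppb / factor


# Hypothetical ambient PCB concentration in water, in ppb.
water_ppb = 0.001
fish_low_ppb, fish_high_ppb = fish_concentration_range(water_ppb, PCB_FACTOR_RANGE)

exceeds_at_low_factor = fish_low_ppb > PCB_FISH_LIMIT_PPB
exceeds_at_high_factor = fish_high_ppb > PCB_FISH_LIMIT_PPB
```

With this hypothetical input, the same water could yield fish below or far above the limit depending on where in the range the factor falls, which is why ambient water concentrations alone say little about fish contamination.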

* During 2000–2003, the EPA conducted a random survey of fish contamination levels in 500 of the 147,000 lakes and reservoirs in the continental United States. The EPA tested bottom-dwelling and predator fish for 268 chemicals that bioaccumulate. The study found that:

| Chemical | EPA’s Limit for Four 8-Ounce Fish Meals Per Month (parts per billion) | Portion of Water Bodies with Fish Exceeding This Limit |
|---|---|---|
| mercury | 300 | 48.8% |
| PCBs | 12 | 16.8% |
| dioxins | 0.15 | 7.6% |
| DDT | 69 | 1.7% |
| chlordane | 67 | 0.3% |

* During 2003–2006, the EPA conducted a random survey of fish contamination levels at 1,623 locations in coastal waters throughout the continental United States, Southeastern Alaska, American Samoa, and Guam. The EPA tested bottom-dwelling and slower-moving fish (such as shrimp, lobsters, and finfish) for 16 chemical contaminants such as inorganic arsenic, cadmium, and PCBs. The study found that fish did not exceed EPA’s four-meal-per-month contamination limits for any of these chemicals:

* An analysis of 17 studies published by the International Journal of Environmental Research and Public Health in 2018 found that:

* Roughly 13% of U.S. surface waters do not meet state fecal bacteria limits for various uses such as recreation and public water supplies. A technology called microbial source tracking (MST) allows scientists to trace the sources of fecal bacteria.[300] A 2005 EPA report summarizes eight studies conducted in various localities with elevated levels of fecal bacteria.[301] [302] Using MST, it found that the dominant sources were:

* “Acidity” is a measure of a liquid’s ability to chemically alter a substance in a way that can lead to corrosion.[312] [313] [314]

* The acidity of liquids is measured on a scale called pH, which ranges from 0 to 14. Lower pH values indicate higher acidity:[315]

pH Scale

* The pH scale is logarithmic, so a one-point change in pH represents a tenfold change in acidity.[317]

* Acidification is a decrease in pH over time. It does not necessarily mean that a liquid has become an acid (pH < 7).[318] [319] [320]
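
The two points above can be made concrete with a short calculation. Because pH is the negative base-10 logarithm of hydrogen-ion concentration, the ratio of acidity between two liquids is 10 raised to the difference in their pH values. The function names and the 8.2 and 8.1 values below are hypothetical, chosen only to illustrate the arithmetic.

```python
def acidity_ratio(ph_before, ph_after):
    """How many times higher the hydrogen-ion concentration is after the change."""
    return 10 ** (ph_before - ph_after)


def is_acid(ph):
    """A liquid is an acid only if its pH is below 7."""
    return ph < 7


# A one-point drop in pH is a tenfold increase in acidity.
one_point_ratio = acidity_ratio(7.0, 6.0)

# Hypothetical liquid going from pH 8.2 to pH 8.1: it has acidified
# (its pH decreased), yet it is still not an acid.
small_drop_ratio = acidity_ratio(8.2, 8.1)
still_not_acid = not is_acid(8.1)
```

In this illustration a 0.1-point drop corresponds to roughly a 26% increase in hydrogen-ion concentration, even though the liquid remains above pH 7.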

* Ocean acidification is the term used to describe a decrease in ocean pH as a result of man-made carbon dioxide emissions. When water absorbs carbon dioxide, it becomes more acidic, which decreases its pH.[321] A large decrease in ocean pH could harm certain sea creatures like shellfish and corals, which are foundational to marine ecosystems.[322]

* Carbon dioxide is a generally “colorless, odorless, non-toxic, non-combustible gas.”[323] [324] [325] It is also:

* Since the outset of the Industrial Revolution in the late 1700s,[331] the portion of the earth’s atmosphere that is composed of carbon dioxide has increased from 0.028% to 0.041%, or by about 48%.[332]

* The mass of the world’s oceans is 270 times greater than that of its atmosphere.[333] The ability of substances to affect or pollute one another is related to their masses. Larger masses are generally less affected because they dilute other substances.[334] [335] [336]

* The National Oceanic and Atmospheric Administration (NOAA) is an agency of the United States Department of Commerce that produces oceanic and atmospheric research.[337]

* Data from the NOAA World Ocean Database shows that the ocean’s average pH has varied as follows since 1910:

* A NOAA webpage states ocean pH measurements prior to 1989 are:

* Within NOAA, some scientists oppose the notion that NOAA’s pre-1989 ocean pH measurements are uninformative. Hernan Garcia—a NOAA oceanographer, director of World Data Service for Oceanography, and U.S. data manager for the International Oceanographic Data and Information Exchange—stated that:

it is too broad to characterize all the older historical pH data as questionable without the benefit of a more in depth analysis. … These historical data are scientifically valuable and cannot be recreated.[340]

* With regard to the accuracy of historical data, global ocean pH averages are generally more consistent during time periods when more measurements are taken:

* Ph.D. oceanographers Richard Feely and Christopher Sabine are University of Washington professors who work for the National Oceanic and Atmospheric Administration (NOAA). They were part of a team of scientists that co-shared the 2007 Nobel Peace Prize with Al Gore for educating people about climate change.[342] [343] [344]

* In 2010, Richard Feely received a Heinz award of $100,000 for his vital role in identifying ocean acidification as global warming’s “evil twin.”[345]

* Feely and Sabine have created computer models to predict ocean pH in the future. They project that ocean acidification will accelerate “to an extent and at rates that have not occurred for tens of millions of years,” which could cause irreversible damage to marine life during this century.[346]

* Per the academic text Flood Geomorphology:

* In 2006, Feely and Sabine published the following chart, which estimates “Historical & Projected” ocean pH using computer models:

* Plotted with NOAA’s historical data, Feely and Sabine’s computer model looks like this:

* In 2013, a hydrologist named Michael Wallace emailed Feely and Sabine about the discrepancies between the historical data and their model. He then filed a Freedom of Information Act request for their underlying data.[350] [351] [352] During this correspondence:

* Per an academic work about data analysis and the “importance of transparency”:

* Per the serial work Implementing Reproducible Research:

* James Cook University in Australia is regarded as “a leader in teaching and research that addresses the critical challenges facing the Tropics.”[364] The Centre of Excellence for Coral Reef Studies—headquartered at this university—is a government-funded research program formed to study coral reef sustainability.[365]

* Scientists from this center conducted several experiments in which they exposed fish to chemically altered water to simulate ocean acidification. These studies report many observations of behavioral impairments, such as attraction to the scents of predators.[366] [367] [368] [369] [370] [371]

* A separate international team of scientists launched a three-year replication study to investigate these results. After analyzing over 900 fishes of six different species, they concluded that findings of behavioral impairment are “not reproducible.” Their paper, published in the journal Nature, states that:

* In reply to the above paper, the scientist who led many of the original studies stated, “you can hardly say you’ve repeated something if you’ve gone and done it in a different way.”[373] He argued that disparate results were because the authors of the replication study failed to:

* With regard to the claims above, the authors of the replication study reported that:

* With further regard to the effects of pollutants on the ability of fish to detect predators:

* In 2019, a scientist named Peter Ridd was awarded $1.2 million in a lawsuit against James Cook University because he was fired after publicly stating that his colleagues’ research “can no longer be trusted.” The ruling was set aside by another court, and Ridd lost an appeal to the High Court of Australia.[390] [391] [392] [393] [394] [395]

* Coral reefs are large rock structures that are home to a vast variety of marine species in shallow, tropical seas. Commonly called the “rainforests of the sea,” these ecosystems sustain a quarter of marine species yet cover less than 1% of the ocean floor.[396] [397] [398]

* Hard corals, or “reef-builders,” are immobile seafloor animals that produce rocky skeletons for structure and protection. These skeletons slowly accumulate beneath them and create massive reefs over time.[399] [400] [401]

* In more acidic water, coral reefs are less dense and therefore more prone to corrosion and structural damage.[402]

* Plant-like organisms called algae live within corals, giving them color and providing them with vital energy.[403] [404] When subjected to severe environmental stress, such as El Niño heat waves, corals will often expel their algae. As a result, reefs sometimes become white.[405] [406] This phenomenon is called “coral bleaching.”[407] [408] [409] [410]

* Coral bleaching raises the risk of mortality but does not mean the coral is dead, as bleached reefs recover when environmental stress is not too severe.[411] [412]

* In 2008, the Center of Excellence for Coral Reef Studies in Australia published a high-profile study that asserted ocean acidification triggered by manmade carbon dioxide emissions causes coral bleaching.[413] [414] The experiment involved placing three common species of corals and algae into tanks flowing with chemically altered water to simulate ocean acidification. The study found that:

* The Australian Institute of Marine Science deems field research more reliable than laboratory experiments for observing the impact of ocean acidification on coral reefs and states:

* In Papua New Guinea, volcanic cracks in the seafloor constantly release carbon dioxide, causing certain reef habitats to exist in perpetually acidified waters. This creates a “natural laboratory” for field research on ocean acidification. The water’s pH value near these cracks can be as low as 7.28,[418] or roughly five times more acidic than the global average over the past decade.[419] A webpage of the Australian Institute of Marine Science that summarizes the results of field research at these sites does not report coral bleaching but mentions:
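As an aside on the pH arithmetic in the item above: pH is a base-10 logarithmic scale, so a difference of one pH unit corresponds to a tenfold difference in hydrogen-ion concentration. The sketch below is illustrative only. The function names are hypothetical, and the reference pH is back-calculated from the "five times" figure rather than taken from any cited source.

```python
import math


def acidity_ratio(ph_low: float, ph_high: float) -> float:
    """Return how many times higher the hydrogen-ion concentration is
    at ph_low than at ph_high (pH is a base-10 logarithmic scale)."""
    return 10 ** (ph_high - ph_low)


def implied_reference_ph(ph: float, times_more_acidic: float) -> float:
    """Return the reference pH against which `ph` would be
    `times_more_acidic` times more acidic."""
    return ph + math.log10(times_more_acidic)


seep_ph = 7.28  # lowest value quoted for the volcanic seeps
reference_ph = implied_reference_ph(seep_ph, 5)
print(f"{reference_ph:.2f}")  # 7.98
```

Under this reading, "roughly five times more acidic" corresponds to a comparison pH of about 8.0.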

* “Coral cover” refers to the portion of a reef that is covered with living coral.[423] This is a key measure of coral reef health, much like tree coverage in a tropical forest.[424]

* Major environmental disturbances can cause reefs to lose much of their coral coverage, but they often recover in about ten to thirty years. Newly recovered reefs typically have different combinations of coral species than before they were damaged because some species grow more quickly than others.[425] [426]

* To obtain the longest-term datasets on coral cover, Just Facts wrote to the IPCC’s coral reef specialists, and they provided the following sources:[434]

* In 2021—nine years after the prediction above—the Australian Institute of Marine Science reported that coral cover on the Great Barrier Reef was:

* Per the U.S. Department of Agriculture:

* In 2014, the IPCC published a report that expressed “low confidence” in the theory that human-caused ocean acidification is reducing coral growth rates.[444]

* The Red List of Threatened Species—published by the International Union for Conservation of Nature (IUCN)—is “the world’s most comprehensive information source on the global extinction risk status of animal, fungus and plant species.”[445]

* According to IUCN guidelines, a species can be considered threatened if population size is “projected, inferred, or suspected” to decrease by 30% over a period of ten to 100 years. With regard to data quality, the IUCN Red List criteria:

* In 2008, the journal Science published a high-profile study that used IUCN criteria to assess the extinction risk of 845 reef-building coral species. It stated that about 33% of these species were threatened with extinction, though the estimates “suffer from lack of” long-term data.[447] [448] As of 2022, the IUCN Red List asserts that 33% of reef-building corals are threatened with extinction.[449] [450]

* In 2021, the journal Nature Ecology & Evolution published the first global study of coral population counts. Based on coral abundance data from 1999–2002 and coral cover data from 1997–2006, the study found that:

* Municipal solid waste, also known as trash or garbage, consists of nonhazardous items that are thrown away, such as newspapers, cans, bottles, packaging, clothes, furniture, food scraps, and grass clippings.[453] [454] Municipal solid waste does not include industrial, hazardous, or construction waste.[455]

* The most common types of municipal solid waste (by weight) are paper (23%), food scraps (22%), yard trimmings (12%), plastics (12%), rubber, leather and textiles (9%), metals (9%), wood (6%), glass (4%), and other materials (3%).[456]

* In 2010, roughly 55% to 65% of municipal solid waste was generated by residences, while 35% to 45% was generated by businesses and institutions (like hospitals and schools).[457]

* In 2018, Americans generated about 292 million tons of trash or 4.9 pounds per person per day.[458] Of this, 50% was placed in landfills, 24% was recycled, 12% was burned for energy, and 9% was composted.[459]
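The shares above can be converted to pounds per person per day with simple multiplication. The sketch below uses only the figures quoted in this item. The dictionary keys and function name are illustrative. The four listed shares sum to 95%, so the remaining 5% is reported here as unlisted rather than assigned to any category.

```python
POUNDS_PER_PERSON_PER_DAY = 4.9  # 2018 figure quoted above

# Shares of municipal solid waste by destination, as quoted above.
SHARES = {
    "landfilled": 0.50,
    "recycled": 0.24,
    "burned for energy": 0.12,
    "composted": 0.09,
}


def pounds_by_destination(total: float, shares: dict) -> dict:
    """Split a per-person daily total across destinations by share."""
    return {name: total * share for name, share in shares.items()}


breakdown = pounds_by_destination(POUNDS_PER_PERSON_PER_DAY, SHARES)
unlisted_share = 1 - sum(SHARES.values())

for name, pounds in breakdown.items():
    print(f"{name}: {pounds:.2f} lb per person per day")
print(f"not itemized above: {unlisted_share:.0%}")
```

The recycled share works out to about 1.2 pounds per person per day.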

* Older landfills were often malodorous, pest-ridden, and laden with noxious pollutants. Modern landfills seldom have such problems and operate under regulations that require controls over the types of trash that can be buried, a daily covering of dirt over the refuse, composite liners, clay caps, and runoff collection systems. Many modern landfills generate energy by collecting and burning methane from decomposing organic materials.[460] [461] [462] [463]

* The average lifecycle of a landfill is about 30–50 years.[464] After this, landfills must be covered and can be used for purposes such as parks, commercial development, golf courses, nature conservatories, ski slopes, and airfields. Hazardous waste dumps can also be used for such purposes.[465] [466] [467] [468]

* The Fresh Kills landfill in Staten Island, NY serviced New York City from 1948 to 2001 and was the largest landfill in the world, consisting of five mounds of trash ranging from 90 to 225 feet in height. It is currently being converted into “the largest park developed in New York City in over 100 years.” Parts of the park have been open since 2010 with soccer fields, walking paths, biking paths, playgrounds, basketball courts, and other recreational areas:

* At the current U.S. population growth rate and the current per-person trash production rate, the U.S. will use about 13.3 billion cubic yards of municipal landfill volume over the next 50 years. Given a landfill height of 90 feet, this equates to a square area that is 12 miles long on each side or four one-thousandths of one percent (0.004%) of the country’s land area:
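The geometry behind these figures can be checked with a short sketch: divide the volume by the height to get an area, then take the square root for the side of a square. The volume and height come from the item above. The U.S. land area of roughly 3.5 million square miles is an assumption added for illustration and is not taken from the cited sources.

```python
import math

FEET_PER_YARD = 3
YARDS_PER_MILE = 1760

# Assumption for illustration: approximate U.S. land area in square miles.
ASSUMED_US_LAND_AREA_SQ_MILES = 3.5e6


def landfill_side_miles(volume_cubic_yards: float, height_feet: float) -> float:
    """Side length (miles) of a square landfill of the given volume and height."""
    height_yards = height_feet / FEET_PER_YARD
    area_sq_yards = volume_cubic_yards / height_yards
    return math.sqrt(area_sq_yards) / YARDS_PER_MILE


side = landfill_side_miles(13.3e9, 90)
share_of_land = side ** 2 / ASSUMED_US_LAND_AREA_SQ_MILES

print(f"{side:.1f} miles per side")           # about 12 miles
print(f"{share_of_land * 100:.3f}% of land")  # about 0.004%
```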

* While citing:

* While citing people who work in waste management, the New York Times reported in 2005 that:

* A scientific, nationally representative survey commissioned in 2019 by Just Facts found that 66% of voters believe that if the U.S. stopped recycling and buried all of its municipal trash for the next 100 years in a single landfill that was 30 feet high, the landfill would cover more than 5% of the nation’s land area.[479] [480] [481] The actual figure is 0.06%.[482]

* In 2018, Americans recycled about 24% of solid municipal waste or 1.2 pounds per person per day.[483]

* In 2018, the recycling rate for:

* Factors that affect the environmental impacts and financial costs associated with recycling include (but are not limited to):

* Factors that determine the financial costs and environmental impacts associated with manufacturing from virgin materials and disposal in landfills include (but are not limited to):

* In the mid-1970s, the EPA concluded that recycling generally produces less pollution than manufacturing from virgin materials.[491]

* In 1989, the U.S. Congress’s Office of Technology Assessment concluded that:

* Environmental impact assessments of curbside recycling have produced conflicting results, such as these:

* Both of the studies cited above (and others) conclude that without governmental subsidies or mandates, curbside recycling is generally more expensive than conventional disposal and manufacturing. The recycling of products made of aluminum is an exception to this generality.[495] [496] [497] [498]

* Various state and local governments have enacted laws and quotas that mandate recycling.[499] Examples include the states of North Carolina,[500] New Jersey,[501] and Connecticut,[502] the cities of Seattle,[503] San Francisco,[504] and New York,[505] 168 municipalities in Massachusetts,[506] and Monroe County, New York.[507]

* Various nations, localities, and businesses have banned or imposed taxes and surcharges on disposable plastic supermarket bags. Examples include China,[508] Ireland,[509] Seattle, San Francisco, Westport, Connecticut,[510] Washington, D.C.,[511] and Whole Foods Market.[512] [513]

* Assessing the full environmental impacts of different products requires examining all aspects of their production, use, and disposal. To do this, researchers perform “life cycle assessments” or LCAs. Per the U.S. Environmental Protection Agency, LCAs allow for:

* A 2011 study published by the United Kingdom’s Environment Agency evaluated nine categories of environmental impacts caused by different types of supermarket bags, such as plastic, degradable plastic, paper, and reusable cotton totes. The study quantified “all significant life cycle stages from raw material extraction, through manufacture, distribution use and reuse to the final management of the carrier bag as waste.”[515] The study found:

Times That the Same Tote Must Be Reused to Have Less Impact Than Disposable Plastic Bags

| Environmental Impact[520] | Cotton Tote (expected life is 52 reuses) | Plastic Polypropylene Tote (expected life is 104 reuses) |
|---|---|---|
| Global warming potential | 172 | 14 |
| Abiotic depletion | 94 | 17 |
| Acidification | 245 | 9 |
| Eutrophication | 393 | 19 |
| Human toxicity | 314 | 14 |
| Fresh water aquatic ecotoxicity | 351 | 7 |
| Marine aquatic ecotoxicity | 354 | 11 |
| Terrestrial ecotoxicity | 1,899 | 30 |
| Photochemical oxidation | 179 | 10 |
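One way to read the table above is to compare each required reuse count with the tote's expected life. The sketch below is illustrative only. It copies the table's figures and treats a category as "reached" when the required reuses do not exceed the expected life, which is an interpretation rather than a rule stated by the study.

```python
EXPECTED_LIFE = {"cotton": 52, "polypropylene": 104}

# Required reuses per impact category, copied from the table above.
REQUIRED_REUSES = {
    "Global warming potential": {"cotton": 172, "polypropylene": 14},
    "Abiotic depletion": {"cotton": 94, "polypropylene": 17},
    "Acidification": {"cotton": 245, "polypropylene": 9},
    "Eutrophication": {"cotton": 393, "polypropylene": 19},
    "Human toxicity": {"cotton": 314, "polypropylene": 14},
    "Fresh water aquatic ecotoxicity": {"cotton": 351, "polypropylene": 7},
    "Marine aquatic ecotoxicity": {"cotton": 354, "polypropylene": 11},
    "Terrestrial ecotoxicity": {"cotton": 1899, "polypropylene": 30},
    "Photochemical oxidation": {"cotton": 179, "polypropylene": 10},
}


def categories_reached(tote: str) -> list:
    """Impact categories in which the required number of reuses
    falls within the tote's expected life."""
    return [
        category
        for category, required in REQUIRED_REUSES.items()
        if required[tote] <= EXPECTED_LIFE[tote]
    ]


for tote in EXPECTED_LIFE:
    reached = categories_reached(tote)
    print(f"{tote}: {len(reached)} of {len(REQUIRED_REUSES)} categories")
```

On these figures, the cotton tote's expected life of 52 reuses falls short of the required count in every category, while the polypropylene tote's expected life of 104 reuses exceeds it in every category.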

* The study did not account for the environmental impacts of washing reusable totes, which is recommended because they can harbor pathogens through meat drippings and other food remnants.[522] [523]

* A 2018 study published by Denmark’s Environmental Protection Agency evaluated 15 categories of environmental impacts caused by different types of supermarket bags, such as paper, plastic, cloth/plastic blend, and cotton. The study quantified the “impacts of providing, using and disposing of” each bag and found the following results:[524]

| Bag Type | Times the Same Bag Must Be Reused to Have the Same Impact as a Disposable Plastic Bag |
|---|---|
| Reusable plastic | 35–84 |
| Disposable paper | 43 |
| Reusable cloth/plastic blend | 870 |
| Reusable cotton | 7,100 |
| Reusable organic cotton | 20,000 |
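To put the larger reuse counts in perspective, they can be converted to years of use. The sketch below assumes one shopping trip per week, which is a hypothetical usage rate chosen for illustration and is not part of the study.

```python
# Assumption for illustration: one reuse per week.
ASSUMED_REUSES_PER_YEAR = 52


def years_of_use(required_reuses: int, reuses_per_year: int = ASSUMED_REUSES_PER_YEAR) -> float:
    """Years needed to reach the required reuse count at a steady rate."""
    return required_reuses / reuses_per_year


for bag, reuses in {"Reusable cotton": 7100, "Reusable organic cotton": 20000}.items():
    print(f"{bag}: {years_of_use(reuses):.0f} years at one reuse per week")
```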

* The study did not account for the environmental impacts of washing reusable totes, which is recommended because they can harbor pathogens through meat drippings and other food remnants.[528] [529]

[1] Entry: “pollution.” American Heritage Science Dictionary. Houghton Mifflin, 2005. Page 495.

[2] Book: Biological Risk Engineering Handbook: Infection Control and Decontamination. Edited by Martha J. Boss and Dennis W. Day. CRC Press, 2016.

Chapter 4: “Toxicology.” By Richard C. Pleus, Harriet M. Ammann, R. Vincent Miller, and Heriberto Robles. Pages 97–110.

Page 98:

The maximum dose that results in no adverse effects is called the threshold dose. Many chemical agents have a threshold dose. The concept of threshold implies that concentrations of exposure present are so low that adverse effect cannot be measured. Some notable exceptions occur, such as when a person develops an allergic reaction to chemical (only specific chemicals are capable of causing allergic reactions).

Another exception, although controversial, is chemicals that cause cancer. Given our current lack of understanding of the mechanisms that lead to cancer initiation and development, regulatory agencies have adopted the position that any dose of a carcinogen has an associated risk of developing cancer. Scientifically, not all carcinogens are in fact capable of causing an effect at low doses; however, the problem is that no one knows what the dose must be in order to cause an effect, so to be safe the dose is set as low as practicable (usually at the limit of detection for instrumentation).

[3] Book: Molecular Biology and Biotechnology: A Guide for Teachers (3rd edition). By Helen Kreuzer and Adrianne Massey. ASM [American Society for Microbiology] Press, 2008.

Page 540: “Paracelsus, a Swiss physician who reformed the practice of medicine in the 16th century, said it best: ‘All substances are poisons, there is none which is not a poison. The dose differentiates a poison and a remedy.’ This is a fundamental principle in modern toxicology: the dose makes the poison.”

[4] Book: Chemical Exposure and Toxic Responses. Edited by Stephen K. Hall, Joana Chakraborty, and Randall J. Ruch. CRC Press, 1997.

Pages 4–5:

The relationship between the dose of a toxicant and the resulting effect is the most fundamental aspect of toxicology. Many believe, incorrectly, that some agents are toxic and others are harmless. In fact, determinations of safety and hazard must always be related to dose. This includes a consideration of the form of the toxicant, the route of exposure, and the chronicity [time] of exposure.

[5] Book: Understanding Environmental Pollution (3rd edition). By Marquita K. Hill. Cambridge University Press, 2010.

Pages 60, 62:

Anything is toxic at a high enough dose. … Even water, drunk in very large quantities, may kill people by disrupting the osmotic balance in the body’s cells. … Potatoes make the insecticide, solanine. But to ingest a lethal dose of solanine would require eating 100 pounds (45.4 kg) of potatoes at one sitting. However, certain potato varieties—not on the market—make enough solanine to be toxic to human beings. Generally, potentially toxic substances are found in anything that we eat or drink.

[6] Book: The Johns Hopkins Manual of Gynecology and Obstetrics (3rd edition). Edited by Kimberly B. Fortner. Lippincott Williams & Wilkins, 2007. Chapter 38: “Critical Care.” By Catherine D. Cansino and Pamela Lipsett.

Page 40: “The lungs are protected by a concentrated supply of endogenous antioxidants; however, when there is too much oxygen or not enough of the antioxidants, the lungs may be damaged, as in acute respiratory distress syndrome (ARDS). … Oxygen therapy with an FiO2 above 60% for longer than 48 hours is considered toxic.”

[7] Book: Clinical Toxicology: Principles and Mechanisms. By Frank A. Barile. CRC Press, 2004.

Page 3: “What transforms a chemical into a toxin depends more on the length of time of exposure, dose (or concentration) of the chemical, or route of exposure, and less on the chemical structure, product formulation, or intended use of the material.”

[8] Book: Biological Risk Engineering Handbook: Infection Control and Decontamination. Edited by Martha J. Boss and Dennis W. Day. CRC Press, 2016.

Chapter 4: “Toxicology.” By Richard C. Pleus, Harriet M. Ammann, R. Vincent Miller, and Heriberto Robles. Pages 97–110.

Page 98:

The degree of harm or the influencing factors of toxicity are related to:

• Chemical and physical properties of the chemical (or its metabolites)

• Amount of the chemical absorbed by the organism

• Amount of chemical that reaches its target organ of toxicity

• Environmental factors and activity of the exposed subject (e.g., working habits, personal hygiene)

• Duration, frequency, and route of exposure

• Ability of the organism to protect itself from a chemical

[9] Book: 1999 Toxics Release Inventory: Public Data Release. U.S. Environmental Protection Agency, April 2001.

Page 1-11:

Some high-volume releases of less toxic chemicals may appear to be a more serious problem than lower-volume releases of more toxic chemicals, when just the opposite may be true. For example, phosgene is toxic in smaller quantities than methanol. A comparison between these two chemicals for setting hazard priorities or estimating potential health concerns, solely on the basis of volumes released, may be misleading. …

The longer the chemical remains unchanged in the environment, the greater the potential for exposure. Sunlight, heat, or microorganisms may or may not decompose the chemical. … As a result, smaller releases of a persistent, highly toxic chemical may create a more serious problem than larger releases of a chemical that is rapidly converted to a less toxic form.

NOTE: Credit for bringing this source to attention belongs to Steven F. Hayward of the Pacific Research Institute. (“2011 Almanac of Environmental Trends.” April 2011. <www.pacificresearch.org>)

[10] Book: Molecular Biology and Biotechnology: A Guide for Teachers (3rd edition). By Helen Kreuzer and Adrianne Massey. ASM [American Society for Microbiology] Press, 2008.

Pages 540–541:

The factors driving your concept of risk—emotion or fact—may or may not seem particularly important to you, yet they are. The risks you are willing to assume and the experiences or products you avoid because of faulty assumptions and misinformation affect the quality of your life and the lives of those around you. Thus, even though it may be tempting to let misperceptions and emotions shape your ideas about risky products and activities, there are risks in misperceiving risks.

[11] Book: Molecular Biology and Biotechnology: A Guide for Teachers (3rd edition). By Helen Kreuzer and Adrianne Massey. ASM [American Society for Microbiology] Press, 2008.

Page 540:

Many people are frightened by the use of synthetic chemicals on food crops because they have heard that these chemicals are “toxic” and “cancer causing,” but are all synthetic chemicals more harmful than substances people readily ingest, like coffee and soft drinks? No (Table 37.2). For example, in a study to assess the toxicities of various compounds, half of the rats died when given 233 mg of caffeine per kg of body weight, but it took more than 10 times that amount of glyphosate (4,500 mg glyphosate/kg body weight), which is the active ingredient in the herbicide Roundup, to cause the same percentage of deaths as 233 mg of caffeine.

Table 37.2 Carcinogenic Substances

| Substance | Carcinogenic Potential * |
|---|---|
| Red wine | 5.0 |
| Beer | 3.0 |
| Edible mushrooms | 0.1 |
| Peanut butter | 0.03 |
| Chlorinated water | 0.001 |
| Polychlorinated biphenyls (PCBs) | 0.0002 |

* The higher the number, the greater the cancer-causing potential. The carcinogenic potential of peanut butter is due to the toxin aflatoxin, produced by a mold that commonly infects peanuts and other crops.

[12] Website: “Caffeine: Acute Effects, Page 2 of 43 Items.” Pubchem, National Center for Biotechnology Information, U.S. Department of Health and Human Services. Accessed February 27, 2020 at <pubchem.ncbi.nlm.nih.gov>

The results from acute animal tests and/or acute human studies are presented in this section. Acute animal studies consist of LD50 [lethal dose] and LC50 [lethal concentration] tests, which present the median lethal dose (or concentration) to the animals. Acute human studies usually consist of case reports from accidental poisonings or industrial accidents. These case reports often help to define the levels at which acute toxic effects are seen in humans. …

Organism [=] rat … Test Type [=] LD50 … Route [=] oral … Dose [=] 192 mg/kg (192 mg/kg) … Effect [=] Brain and Coverings: Other Degenerative Changes; Behavioral: Withdrawal; Kidney, Ureter, and Bladder: Interstitial Nephritis … Reference [=] … Journal of New Drugs., 5(252), 1965

[13] Report: “Toxicological Profile for Glyphosate.” U.S. Department of Health & Human Services, Agency for Toxic Substances and Disease Registry, August 2020. <www.atsdr.cdc.gov>

An acute oral LD50 [lethal dose to 50% of test animals] value of 4,320 mg/kg/day was reported following single oral dosing of rats with glyphosate technical (EPA 1992b). In a developmental toxicity study, 6/25 pregnant rats died during oral dosing of glyphosate technical at 3,500 mg/kg/day; there were no deaths during treatment at 1,000 mg/kg/day (EPA 1992e). No adequate sources were located regarding death in laboratory animals exposed to glyphosate technical by inhalation or dermal routes.

[14] Book: Chemical Exposure and Toxic Responses. Edited by Stephen K. Hall, Joana Chakraborty, and Randall J. Ruch. CRC Press, 1997.

Pages 4–5: “The relationship between the dose of a toxicant and the resulting effect is the most fundamental aspect of toxicology. Many believe, incorrectly, that some agents are toxic and others are harmless. In fact, determinations of safety and hazard must always be related to dose. This includes a consideration of the form of the toxicant, the route of exposure, and the chronicity [time] of exposure.”

[15] Calculated with data from:

a) Webpage: “Caffeine Content for Coffee, Tea, Soda and More.” Mayo Clinic. Accessed July 5, 2018 at <www.mayoclinic.org>

“The charts below show typical caffeine content in popular beverages. Drink sizes are in fluid ounces …. Caffeine is shown in milligrams (mg). … Coffee drinks [=] Brewed … Size in oz. [=] 8 … Caffeine (mg) [=] 95–165”

b) Paper: “The Weight of Nations: An Estimation of Adult Human Biomass.” By Sarah Catherine Walpole. BMC Public Health, 2012. <bmcpublichealth.biomedcentral.com>

Page 3: “Average body mass globally was 62 kg.”

c) Book: Molecular Biology and Biotechnology: A Guide for Teachers (3rd edition). By Helen Kreuzer and Adrianne Massey. ASM [American Society for Microbiology] Press, 2008.

Page 540: “[I]n a study to assess the toxicities of various compounds, half of the rats died when given 233 mg of caffeine per kg of body weight….”

CALCULATIONS:
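One way to combine the three figures quoted above is sketched below. It scales the rat median lethal dose linearly by body mass to an average adult, which is a simplifying assumption, and the variable names are illustrative.

```python
CAFFEINE_LD50_MG_PER_KG = 233          # rat study figure quoted in (c)
AVERAGE_BODY_MASS_KG = 62              # global average quoted in (b)
CAFFEINE_MG_PER_8_OZ_CUP = (95, 165)   # brewed coffee range quoted in (a)

# Dose for an average adult if the rat figure scaled linearly with body mass.
scaled_dose_mg = CAFFEINE_LD50_MG_PER_KG * AVERAGE_BODY_MASS_KG

low_caffeine, high_caffeine = CAFFEINE_MG_PER_8_OZ_CUP
cups_if_strong = scaled_dose_mg / high_caffeine  # fewer cups when each is stronger
cups_if_weak = scaled_dose_mg / low_caffeine

print(f"{scaled_dose_mg:,} mg of caffeine")
print(f"{cups_if_strong:.0f} to {cups_if_weak:.0f} eight-ounce cups of brewed coffee")
```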

[16] Article: “Too Much Caffeine Caused Spring Hill Student’s Death.” By Teddy Kulmala and Cynthia Roldán. The State, May 16, 2017. <www.thestate.com>

A 16-year-old Spring Hill High School student who collapsed in a classroom last month died from ingesting too much caffeine, the county coroner said Monday.

The official cause of death for Davis Allen Cripe was a “caffeine-induced cardiac event causing a probable arrhythmia,” said Richland County Coroner Gary Watts. It was the result of the teen ingesting the caffeine from a large Diet Mountain Dew, a cafe latte from McDonald’s and an energy drink over the course of about two hours, Watts said. …

Davis had purchased the latte at a McDonald’s around 12:30 p.m. April 26, Watts said. He consumed the Diet Mountain Dew “a little time after that” and the energy drink sometime after the soda.

[17] Calculated with data from:

a) Paper: “An Assessment of Dietary Exposure to Glyphosate Using Refined Deterministic and Probabilistic Methods.” By C.L. Stephenson and C.A. Harris. Food and Chemical Toxicology, September 2016. Pages 28–41. <www.sciencedirect.com>

Page 40: “Overall, the TMDI [theoretical maximum daily intake] can be useful as a screening tool to rapidly identify potential risks to the consumer, but it can be demonstrated that it overestimates actual exposure and does not give a realistic estimate of dietary exposure. By systematic use of refinements, such as substituting MRLs [maximum residue levels] for median residue levels and the use of processing and residues monitoring information, the total modelled exposure to glyphosate is reduced by a factor of 67. This estimate could be refined further by additional monitoring or processing data, or using refined modelling based on the probabilistic method developed by the EFSA [European Food Safety Authority] Panel on Plant Protection Products and their Residues (EFSA PPR, 2012). The refined chronic dietary intake of glyphosate for the critical EU diet (Irish adult), using the deterministic approaches employed in PRIMo [Pesticide Residue Intake Model] rev. 2, was 0.0061 mg/kg bw/day, or 1.2% of the ADI [acceptable daily intake] of 0.5 mg/kg bw/day. The exposure level at which no adverse effect was seen (i.e., the no-observed-adverse-effect level, or NOAEL), in the studies used to derive the ADI, was approximately 8200 times higher than this refined chronic dietary intake. Indicative probabilistic calculations, based on the EFSA PPR guidance, show that the actual chronic dietary exposure is likely to be even lower (<0.0045 mg/kg bw/day; P99.9). In 2004, the JMPR [Joint FAO/WHO Meeting on Pesticide Residues] established an ADI of 1 mg/kg bw/day (WHO/FAO, 2004), [World Health Organization/Food and Agriculture Organization of the United Nations] and in 2006, the US EPA [U.S. Environmental Protection Agency] set a chronic population-adjusted dose (cPAD) of 1.75 mg/kg. Since the EU [European Union] ADI has been used in these risk assessments, it represents the most conservative assumptions regarding the endpoint, globally.”

b) Paper: “The Weight of Nations: An Estimation of Adult Human Biomass.” By Sarah Catherine Walpole. BMC Public Health, 2012. <bmcpublichealth.biomedcentral.com>

Page 3: “Average body mass globally was 62 kg.”

c) Book: Molecular Biology and Biotechnology: A Guide for Teachers (3rd edition). By Helen Kreuzer and Adrianne Massey. ASM [American Society for Microbiology] Press, 2008.

Page 540: “[I]n a study to assess the toxicities of various compounds, half of the rats died when given 233 mg of caffeine per kg of body weight, but it took more than 10 times that amount of glyphosate (4,500 mg glyphosate/kg body weight), which is the active ingredient in the herbicide Roundup, to cause the same percentage of deaths as 233 mg of caffeine.”

d) Report: “Toxicological Profile for Glyphosate.” U.S. Department of Health & Human Services, Agency for Toxic Substances and Disease Registry, August 2020. <www.atsdr.cdc.gov>

“An acute oral LD50 [lethal dose] value of 4,320 mg/kg/day was reported following single oral dosing of rats with glyphosate technical (EPA 1992b).”

CALCULATION: 4,320 mg glyphosate per kg of body mass lethal dosage / 0.0045 mg glyphosate consumption per kg of body mass per day = 960,000 times increase to reach lethal dosage
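The division above can be reproduced in a few lines. This sketch uses the two figures quoted in this footnote, and the variable names are illustrative.

```python
GLYPHOSATE_LD50_MG_PER_KG = 4320          # rat oral LD50 quoted above
DIETARY_EXPOSURE_MG_PER_KG_DAY = 0.0045   # upper estimate of chronic dietary exposure quoted above

# How many times the estimated daily dietary exposure would need to
# increase to equal the rat median lethal dose.
ratio = GLYPHOSATE_LD50_MG_PER_KG / DIETARY_EXPOSURE_MG_PER_KG_DAY

print(f"{round(ratio):,} times")  # 960,000 times
```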

[18] Website: “Glyphosate: Acute Effects.” Pubchem, National Center for Biotechnology Information, U.S. Department of Health and Human Services. Accessed February 27, 2020 at <pubchem.ncbi.nlm.nih.gov>

The results from acute animal tests and/or acute human studies are presented in this section. Acute animal studies consist of LD50 [lethal dose] and LC50 [lethal concentration] tests, which present the median lethal dose (or concentration) to the animals. Acute human studies usually consist of case reports from accidental poisonings or industrial accidents. These case reports often help to define the levels at which acute toxic effects are seen in humans. …

Organism [=] rat … Test Type [=] LD50 … Route [=] oral … Dose [=] 4873 mg/kg (4873 mg/kg) … Effect [=] Behavioral: Convulsions or Effect on Seizure Threshold; Lungs, Thorax, or Respiration: Respiratory Stimulation … Reference [=] … Toxicology and Applied Pharmacology., 45(319), 1978

[19] Article: “Chemophobia in Europe and Reasons for Biased Risk Perceptions.” By Michael Siegrist and Angela Bearth. Nature Chemistry, November 7, 2019. Pages 1071–1072. <www.dnamedialab.it>

Pages 1071–1072:

To better understand consumers’ knowledge and risk perception related to chemicals, we conducted a survey across eight European countries: Austria, France, Germany, Italy, Poland, Sweden, Switzerland and the United Kingdom2. There were a total of 5,631 participants, with roughly 700 from each country. …

It only requires the presence of a small amount of a substance that is seen to be unnatural—and thus associated with negative outcomes—to have a significant effect on perceived naturalness8 or perceived risk2. That people rely only on the act of contamination (or contagion) when assessing the properties of a given substance, while ignoring the quantity of that substance, can be referred to as the contagion heuristic. Relying on this heuristic, laypeople show a surprisingly robust insensitivity to dose–response relationships2,9. For many people, a chemical substance is simply viewed as being either safe or dangerous; the link between any potential hazard to human health and the exposure route or dosage is not appreciated. For example, fewer than a quarter of respondents in our survey correctly agreed that a small amount of a toxic chemical substance in a consumer product is not necessarily harmful2. Thus, there exists a fundamental conflict between people’s insensitivity to dose–response relationships and the fact that there are safe limits of exposure to a toxic chemical substance. …

Fig.1b: Responses to two questions designed to gauge the chemical knowledge of the consumers taking part in the survey. In each case the results are shown for the pooled sample across eight countries and the results are taken from the study reported in ref.2 … Knowledge of European consumers (n = 5,631) …

The chemical structure of the synthetically produced salt (NaCl) is exactly the same as that of salt found naturally in the sea … Correct response [=] 18% … Incorrect response [=] 32% … Don’t know [=] 50%

Being exposed to a toxic synthetic chemical substance is always dangerous, no matter what the level of exposure is … Correct response [=] 9% … Incorrect response [=] 76% … Don’t know [=] 15%

[20] Article: “Scientific Survey Shows Voters Across the Political Spectrum Are Ideologically Deluded.” By James D. Agresti. Just Facts, April 16, 2021. <www.justfacts.com>

The survey was conducted by Triton Polling & Research, an academic research firm that serves scholars, corporations, and political campaigns. The responses were obtained through live telephone surveys of 1,000 likely voters across the U.S. during November 4–11, 2020. This sample size is large enough to accurately represent the U.S. population. Likely voters are people who say they vote “every time there is an opportunity” or in “most” elections.

The margin of sampling error for all respondents is ±3% with at least 95% confidence. The margins of error for the subsets are 5% for Biden voters, 5% for Trump voters, 4% for males, 5% for females, 9% for 18 to 34 year olds, 4% for 35 to 64 year olds, and 5% for 65+ year olds.

The survey results presented in this article are slightly weighted to match the ages and genders of likely voters. The political parties and geographic locations of the survey respondents almost precisely match the population of likely voters. Thus, there is no need for weighting based upon these variables.

NOTE: For facts about what constitutes a scientific survey and the factors that impact their accuracy, visit Just Facts’ research on Deconstructing Polls & Surveys.
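The ±3% margin of sampling error quoted above for 1,000 respondents is consistent with the standard formula for a proportion at 95% confidence. The sketch below assumes simple random sampling and the most conservative case of a 50% proportion. It ignores any effect of weighting, and the function names are illustrative.

```python
import math

Z_95 = 1.96  # z-score for 95% confidence


def margin_of_error(sample_size: int, proportion: float = 0.5) -> float:
    """Margin of sampling error for a proportion under simple random sampling."""
    return Z_95 * math.sqrt(proportion * (1 - proportion) / sample_size)


def implied_sample_size(margin: float, proportion: float = 0.5) -> float:
    """Sample size that would produce the given margin of error."""
    return (Z_95 / margin) ** 2 * proportion * (1 - proportion)


print(f"n = 1,000 -> ±{margin_of_error(1000):.1%}")              # ±3.1%
print(f"±5% -> about {implied_sample_size(0.05):.0f} respondents")
print(f"±9% -> about {implied_sample_size(0.09):.0f} respondents")
```

Under these assumptions, the larger margins reported for subsets correspond to smaller subsamples.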

[21] Dataset: “Just Facts 2020 U.S. Nationwide Survey.” Just Facts, November 2020. <www.justfacts.com>

Page 4:

Q18. Do you believe that contact with a toxic chemical is always dangerous, no matter what the level of exposure?

Yes [=] 65.0%

No [=] 31.3%

Unsure [=] 3.4%

Refused [=] .3%

[22] For facts about how surveys work and why some are accurate while others are not, see Just Facts’ research on Deconstructing Polls & Surveys.

[23] Letter to the editor: “Reply to Comments: On the Relationship of Toxicity and Carcinogenicity.” By Lauren Zeise, Edmund A.C. Crouch, and Richard Wilson. Risk Analysis, December 1985. Pages 265–270. <onlinelibrary.wiley.com>

Page 265:

[W]e began a systematic study of the chemicals tested by the National Cancer Institute (NCI),2 and National Toxicology Program (NTP).3 We detail the analysis and results elsewhere.5,11 …

The NCI/NTP tests are designed to find “carcinogens,” so the doses used are the highest which can be tolerated without causing early death or certain other (noncarcinogenic) adverse effects. Two results are clear in the NCI/NTP series:

1. No chemical in this series induced tumors in all dosed animals. This result would certainly be expected if some low toxicity chemical had the high potency of TCDD [tetrachlorodibenzo-p-dioxin]. There are only a few chemicals for which almost 100% tumor incidence occurred in one or more of the species/sex combination tested, and where the lack of 100% incidence may be due to high early mortality. Examples are carbon tetrachloride, dibromochloropropane, and 4,4’-thiodianiline.

2. A chemical was more likely to exhibit carcinogenicity if a clear toxic effect was elicited. The NCI/NTP experiments were run as close to a maximum tolerated dose (MTD) as could be achieved, but the actual toxicity of the applied doses varied from experiment to experiment. We found that chemicals tested at a maximum dose which did not elicit a toxic effect (early deaths or a weight depression) rarely induced a significant increase in tumor rate. This is shown in Table I for male rats, and similar results were found in preliminary analysis of results in female rats.

These results, taken together, show that chronic toxicity and carcinogenicity are related.

[24] Letter to the editor: “Reply to Comments: On the Relationship of Toxicity and Carcinogenicity.” By Lauren Zeise, Edmund A.C. Crouch, and Richard Wilson. Risk Analysis, December 1985. Pages 265–270. <onlinelibrary.wiley.com>

Page 265: “After analyzing approximately 200 results of animal cancer bioassays, we were struck by the infrequency with which relatively nontoxic chemicals exhibit potent carcinogenic effects.”

[25] Book: New Risks: Issues and Management. Edited by Louis A. Cox and Paolo F. Ricci. Springer, 1990.

Chapter: “Carcinogenicity Versus Acute Toxicity: Is There a Relationship?” By Bernhard Metzger, Edmund Crouch, and Richard Wilson. Pages 77–85. <link.springer.com>

Page 77:

Carcinogenic potency is compared in rodents with acute toxicity for a group of chemicals (155) which were tested independently of and mostly before the NCI/NTP [National Cancer Institute/National Toxicology Program] program. For the entire data set and several subsets, we find partially biased statistically significant linear relationships between potency and the inverse of LD50 [lethal dose, 50%]. On average, the chemicals studied outside the NCI/NTP program are more carcinogenic compared to their acute toxicity than the NCI/NTP chemicals. Analysis shows a clear unbiased upper bound for carcinogenic potency. The correlation between potency and acute toxicity is robust with respect to species and route of administration. We find good agreement between oral and inhalation experiments, for toxicities and carcinogenicities.

Page 84:

Observed Correlations

The results suggest that little bias is introduced when mixing species (rats, mice) in TD50 [toxic dose, 50%]-LD50 regressions. Similarly, they indicate that oral data may be used as surrogates for inhalation data and vice versa (Zeise and others, 1984; Tancrede and others, 1986).

For large samples (n>50), a very nearly linear relationship (unit slope in log-log space) is found between carcinogenic potency and acute toxicity. The factor of proportionality, 1/D, depends on the particular data set used. Bias arises due to the fact that neither the NCI/NTP data nor the non-NCI/NTP data represent a random sample of a population of chemicals, but contain chemicals whose potency and acute toxicity are probably far above the median values of the population of, say, all chemicals in RTECS [Registry of Toxic Effects of Chemical Substances]. Any inference drawn on the basis of the statistics of these samples can thus not simply be extrapolated to the universe of chemicals.
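As an illustration of what “unit slope in log-log space” means, the following is a minimal sketch that fits a straight line to log-transformed values. The data are hypothetical numbers constructed so that potency equals (1/D) × (1/LD50); they are not taken from the chapter. The function name `fit_loglog` and the value D = 20 are assumptions made only for this example.

```python
import math


def fit_loglog(inv_ld50, potency):
    """Ordinary least squares of log10(potency) on log10(1/LD50).

    Returns (slope, intercept) of the fitted line in log-log space.
    """
    xs = [math.log10(v) for v in inv_ld50]
    ys = [math.log10(v) for v in potency]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical inputs (not data from the cited chapter).
D = 20.0                              # assumed proportionality constant
ld50 = [5.0, 50.0, 500.0, 5000.0]     # made-up LD50 values
inv_ld50 = [1.0 / v for v in ld50]
potency = [(1.0 / D) * x for x in inv_ld50]

slope, intercept = fit_loglog(inv_ld50, potency)

# With a unit slope, the intercept equals log10(1/D), so D can be recovered.
estimated_D = 10 ** (-intercept)
```

In this constructed case the fitted slope is 1 and the intercept recovers the assumed D. Real data sets would scatter around the line, and, as the quoted passage cautions, a fit on a non-random sample of chemicals cannot simply be extrapolated to all chemicals.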

[26] Book: New Risks: Issues and Management. Edited by Louis A. Cox and Paolo F. Ricci. Springer, 1990.

Chapter: “Carcinogenicity Versus Acute Toxicity: Is There a Relationship?” By Bernhard Metzger, Edmund Crouch, and Richard Wilson. Pages 77–85. <link.springer.com>

Page 84: “The correlation between [cancer-causing] potency and acute toxicity appears largely independent of species [mice or rats] and route of administration.”

[27] Report: “Carcinogens and Anticarcinogens in the Human Diet: A Comparison of Naturally Occurring and Synthetic Substances.” National Research Council, Committee on Comparative Toxicity of Naturally Occurring Carcinogens. National Academy Press, 1996.

Chapter 5: “Risk Comparisons.” <www.ncbi.nlm.nih.gov>

Correlation Between Cancer Potency and Other Measures of Toxicity

Several investigators have noted a strong correlation between the TD50 [“the level of exposure resulting in an excess lifetime cancer risk of 50%”] and the MTD [Maximum Tolerated Dose] (Bernstein and others 1985, Gaylor, 1989, Krewski and others 1989, Reith and Starr 1989, Freedman and others 1993).

Krewski and others (1989) noted that the values of q1* derived from the linearized multistage model fitted to 263 data sets were also highly correlated with the maximum doses. As with the TD50, this association between q1* and the MTD occurs as a result of the limited range of values that q1* can assume once the MTD is established. This correlation is illustrated in Figure 5-3 using the same data presented in Figure 5-2. As indicated in Figure 5-3, there is a strong negative correlation between q1 and the MTD. Thus, the MTD has a strong influence on measures of carcinogenic potency at both high and low doses. …

The relationship between acute toxicity and carcinogenic potency has been the subject of several investigations. Parodi and others (1982) found a significant correlation (r = 0.49) between carcinogenic potency and acute toxicity. Zeise and others (1982, 1984, 1986) reported a high correlation between acute toxicity, as measured by the LD50 [lethal dose, 50%], and carcinogenic potency. Metzger and others (1989) reported somewhat lower correlations (r = 0.6) between the LD50 and TD50 [toxic dose, 50%] for 264 carcinogens selected from the CPDB [Carcinogenic Potency Database]. McGregor (1992) calculated the correlation between the TD50 and LD50 for different classes of carcinogens considered by IARC [International Agency for Research on Cancer]. The highest correlations were observed in IARC Group 1 carcinogens (i.e., known human carcinogens) with r = 0.72 for mice and r = 0.91 for rats, based on samples of size 9 and 8, respectively. Goodman and Wilson (1992) calculated the correlation between the TD50 and LD50 for 217 chemicals that they classified as being either genotoxic or nongenotoxic. The correlation coefficient for genotoxic chemicals was approximately r = 0.4 regardless of whether rats or mice were used, whereas the correlation coefficient for nongenotoxic chemicals was approximately r = 0.7. Haseman and Seilkop (1992) showed that chemicals with low MTDs (i.e., high toxicity) were somewhat more likely to be rodent carcinogens than chemicals with high MTDs, but this association was limited primarily to gavage studies.

[28] Report: “The Plain English Guide to the Clean Air Act.” U.S. Environmental Protection Agency, Office of Air Quality Planning and Standards, April 2007. <www.epa.gov>

Page 4:

Six common air pollutants (also known as “criteria pollutants”) are found all over the United States. They are particle pollution (often referred to as particulate matter), ground-level ozone, carbon monoxide, sulfur oxides, nitrogen oxides, and lead. These pollutants can harm your health and the environment, and cause property damage. …

EPA [U.S. Environmental Protection Agency] calls these pollutants “criteria” air pollutants because it regulates them by developing human health-based and/or environmentally-based criteria (science-based guidelines) for setting permissible levels. The set of limits based on human health is called primary standards. Another set of limits intended to prevent environmental and property damage is called secondary standards. A geographic area with air quality that is cleaner than the primary standard is called an “attainment” area; areas that do not meet the primary standard are called “nonattainment” areas.

[29] Webpage: “What Are the Six Common Air Pollutants?” U.S. Environmental Protection Agency. Last updated September 18, 2015. <www.epa.gov>

“For each of these [criteria] pollutants, EPA [U.S. Environmental Protection Agency] tracks two kinds of air pollution trends: air concentrations based on actual measurements of pollutant concentrations in the ambient (outside) air at selected monitoring sites throughout the country, and emissions based on engineering estimates of the total tons of pollutants released into the air each year.”

[30] U.S. Code, Title 42, Chapter 85, Subchapter I, Part A, Section 7403: “Research, Investigation, Training, and Other Activities.” Accessed February 13, 2024 at <www.law.cornell.edu>

(a) Research and Development Program for Prevention and Control of Air Pollution

The Administrator shall establish a national research and development program for the prevention and control of air pollution….

(c) Air Pollutant Monitoring, Analysis, Modeling, and Inventory Research

In carrying out subsection (a), the Administrator shall conduct a program of research, testing, and development of methods for sampling, measurement, monitoring, analysis, and modeling of air pollutants.

[31] U.S. Code, Title 42, Chapter 85, Subchapter I, Part A, Section 7408: “Air Quality Criteria and Control Techniques.” Accessed February 13, 2024 at <www.law.cornell.edu>

(a) Air Pollutant List; Publication and Revision by Administrator; Issuance of Air Quality Criteria for Air Pollutants

(1) For the purpose of establishing national primary and secondary ambient air quality standards, the Administrator shall within 30 days after December 31, 1970, publish, and shall from time to time thereafter revise, a list which includes each air pollutant—

(A) emissions of which, in his judgment, cause or contribute to air pollution which may reasonably be anticipated to endanger public health or welfare;

(B) the presence of which in the ambient air results from numerous or diverse mobile or stationary sources; and

(C) for which air quality criteria had not been issued before December 31, 1970 but for which he plans to issue air quality criteria under this section.

[32] Report: “EPA’s Regulation of Coal-Fired Power: Is a ‘Train Wreck’ Coming?” By James E. McCarthy and Claudia Copeland. Congressional Research Service, August 8, 2011. <www.fas.org>

Pages 17–18:

In essence, NAAQS [National Ambient Air Quality Standards] are standards that define what EPA [U.S. Environmental Protection Agency] considers to be clean air. Their importance stems from the long and complicated implementation process that is set in motion by their establishment. Once NAAQS have been set, EPA, using monitoring data and other information submitted by the states, identifies areas that exceed the standards and must, therefore, reduce pollutant concentrations to achieve them. State and local governments then have three years to produce State Implementation Plans which outline the measures they will implement to reduce the pollution levels in these “nonattainment” areas. Nonattainment areas are given anywhere from three to 20 years to attain the standards, depending on the pollutant and the severity of the area’s pollution problem.

EPA also acts to control many of the NAAQS pollutants wherever they are emitted through national standards for certain products that emit them (particularly mobile sources, such as automobiles) and emission standards for new stationary sources, such as power plants.

In the 1970s, EPA identified six pollutants or groups of pollutants for which it set NAAQS.41 But that was not the end of the process. When it gave EPA the authority to establish NAAQS, Congress anticipated that the understanding of air pollution’s effects on public health and welfare would change with time, and it required that EPA review the standards at five-year intervals and revise them, as appropriate.

[33] Final rule: “Primary National Ambient Air Quality Standards for Nitrogen Dioxide; Final Rule (Part III).” Federal Register, February 9, 2010. <www3.epa.gov>

Page 6478: “NAAQS [National Ambient Air Quality Standards] decisions can have profound impacts on public health and welfare, and NAAQS decisions should be based on studies that have been rigorously assessed in an integrative manner not only by EPA [U.S. Environmental Protection Agency] but also by the statutorily mandated independent advisory committee, as well as the public review that accompanies this process.”

[34] Webpage: “Criteria Air Pollutants.” U.S. Environmental Protection Agency. Last updated November 30, 2023. <www.epa.gov>

The Clean Air Act requires EPA [U.S. Environmental Protection Agency] to set National Ambient Air Quality Standards (NAAQS) for six commonly found air pollutants known as criteria air pollutants. …

Criteria Air Pollutant Information

• Ozone

• Particulate Matter

• Carbon Monoxide

• Lead

• Sulfur Dioxide

• Nitrogen Dioxide

[35] U.S. Code, Title 42, Chapter 85, Subchapter I, Part A, Section 7409: “National Primary and Secondary Ambient Air Quality Standards.” Accessed February 13, 2024 at <www.law.cornell.edu>

(a) Promulgation

(1) The Administrator—

(A) within 30 days after December 31, 1970, shall publish proposed regulations prescribing a national primary ambient air quality standard and a national secondary ambient air quality standard for each air pollutant for which air quality criteria have been issued prior to such date; and

(B) after a reasonable time for interested persons to submit written comments thereon (but no later than 90 days after the initial publication of such proposed standards) shall by regulation promulgate such proposed national primary and secondary ambient air quality standards with such modifications as he deems appropriate.